January 30, 2026

10x the Coding Workflow with Antigravity

Software delivery is increasingly constrained not by writing code, but by executing work end to end. Planning, validation, testing, and review now consume a significant share of engineering effort. A developer survey shows that engineers spend only about one-third of their time writing or modifying code, highlighting a structural productivity limitation that editor-level assistance cannot address.

AI-assisted development tools have evolved from autocomplete to conversational systems capable of generating and refactoring code. However, most remain suggestion-driven and do not execute or validate complete development tasks.

Antigravity adopts an execution-oriented model in which work is defined as explicitly scoped tasks that an agent plans, implements, validates, and summarizes through structured artifacts. The agent capabilities are powered by Gemini 3 Pro, whose reported benchmark results include 76.2 percent on SWE-bench Verified for end-to-end issue resolution and 54.2 percent on Terminal-Bench 2.0 for reliable multi-step tool and command execution.

This article examines Antigravity in practice and outlines how this execution model improves developer productivity through structured tasks and artifact-driven review.

Source: https://www.sonarsource.com/blog/how-much-time-do-developers-spend-actually-writing-code/

Delivering a Complete Feature Using a Single Antigravity Agent

To understand how Antigravity accelerates day-to-day development work, this section walks through the delivery of a complete backend feature using a single agent. The goal is not to showcase code generation, but to demonstrate how task-level execution compresses the planning, implementation, validation, and review cycle into a single, repeatable workflow.

Feature

The feature adds rate limiting to the POST /api/orders endpoint. It enforces a fixed request limit per IP address, returns a structured error response when the limit is exceeded, and exposes remaining quota through response headers. This represents a typical production change that normally spans implementation, testing, and review.
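To make the feature description concrete, the following is a minimal sketch of a fixed-window limiter keyed by IP address. It is not the code from the accompanying repository: the class name `FixedWindowRateLimiter`, the `X-RateLimit-*` header names, and the error body shape are all assumptions chosen for illustration.

```typescript
// Hypothetical sketch: class name, header names, and error shape are assumptions,
// not the code from the accompanying repository.
interface RateLimitResult {
  allowed: boolean;
  limit: number;
  remaining: number;
  resetAt: number; // epoch milliseconds at which the current window ends
}

interface WindowState {
  count: number;
  windowStart: number;
}

class FixedWindowRateLimiter {
  private readonly windows = new Map<string, WindowState>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Count one request from `ip` at time `now` and report whether it is allowed.
  check(ip: string, now: number = Date.now()): RateLimitResult {
    let state = this.windows.get(ip);
    // Start a fresh window for a new IP or once the previous window has elapsed.
    if (state === undefined || now - state.windowStart >= this.windowMs) {
      state = { count: 0, windowStart: now };
      this.windows.set(ip, state);
    }
    const allowed = state.count < this.limit;
    if (allowed) {
      state.count += 1;
    }
    return {
      allowed,
      limit: this.limit,
      remaining: Math.max(0, this.limit - state.count),
      resetAt: state.windowStart + this.windowMs,
    };
  }
}

// Expose the remaining quota through response headers (assumed header names).
function rateLimitHeaders(result: RateLimitResult): Record<string, string> {
  return {
    "X-RateLimit-Limit": String(result.limit),
    "X-RateLimit-Remaining": String(result.remaining),
  };
}

// Build the structured error for a rejected request, or null when it is allowed.
function rejectionFor(
  result: RateLimitResult,
): { status: number; body: { error: string; resetAt: number } } | null {
  if (result.allowed) {
    return null;
  }
  return { status: 429, body: { error: "rate_limit_exceeded", resetAt: result.resetAt } };
}
```

A route handler would call `check` with the client IP, attach `rateLimitHeaders` to every response, and return the `rejectionFor` payload when it is not null. An in-memory map like this only works for a single process; a shared store would be needed across instances.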

Files in Scope

The task is deliberately constrained to a small set of files to minimize coordination overhead and avoid unintended changes:

src/api/orders.ts

src/lib/rateLimit.ts

tests/orders.test.ts

A working implementation of this feature is available in the accompanying GitHub repository for reference.

Task Definition

Instead of informal instructions, the feature is expressed as an executable task with explicit scope, rules, and verification criteria. This replaces back-and-forth clarification with a single, unambiguous input to the agent.
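One way to picture a task with explicit scope, rules, and verification criteria is as a small data structure. The sketch below is purely illustrative: the `TaskDefinition` shape and the `findMissingSections` helper are hypothetical and do not represent Antigravity's actual task format. The field values are taken from the feature described in this section.

```typescript
// Hypothetical structure for illustration only; not Antigravity's task format.
interface TaskDefinition {
  title: string;
  filesInScope: string[];
  rules: string[];
  verification: string[];
}

const rateLimitTask: TaskDefinition = {
  title: "Add rate limiting to POST /api/orders",
  filesInScope: [
    "src/api/orders.ts",
    "src/lib/rateLimit.ts",
    "tests/orders.test.ts",
  ],
  rules: [
    "Enforce a fixed request limit per IP address",
    "Return a structured error response when the limit is exceeded",
    "Expose remaining quota through response headers",
    "Do not modify files outside the declared scope",
  ],
  verification: [
    "Tests cover requests below and above the limit",
    "The existing test suite still passes",
  ],
};

// Report which sections of a task are empty, so that an underspecified task
// is caught before it is handed to an agent.
function findMissingSections(task: TaskDefinition): string[] {
  const missing: string[] = [];
  if (task.title.trim() === "") missing.push("title");
  if (task.filesInScope.length === 0) missing.push("filesInScope");
  if (task.rules.length === 0) missing.push("rules");
  if (task.verification.length === 0) missing.push("verification");
  return missing;
}
```

Writing the task down in this form makes omissions visible: a task with no verification criteria, for example, is flagged before execution rather than discovered during review.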

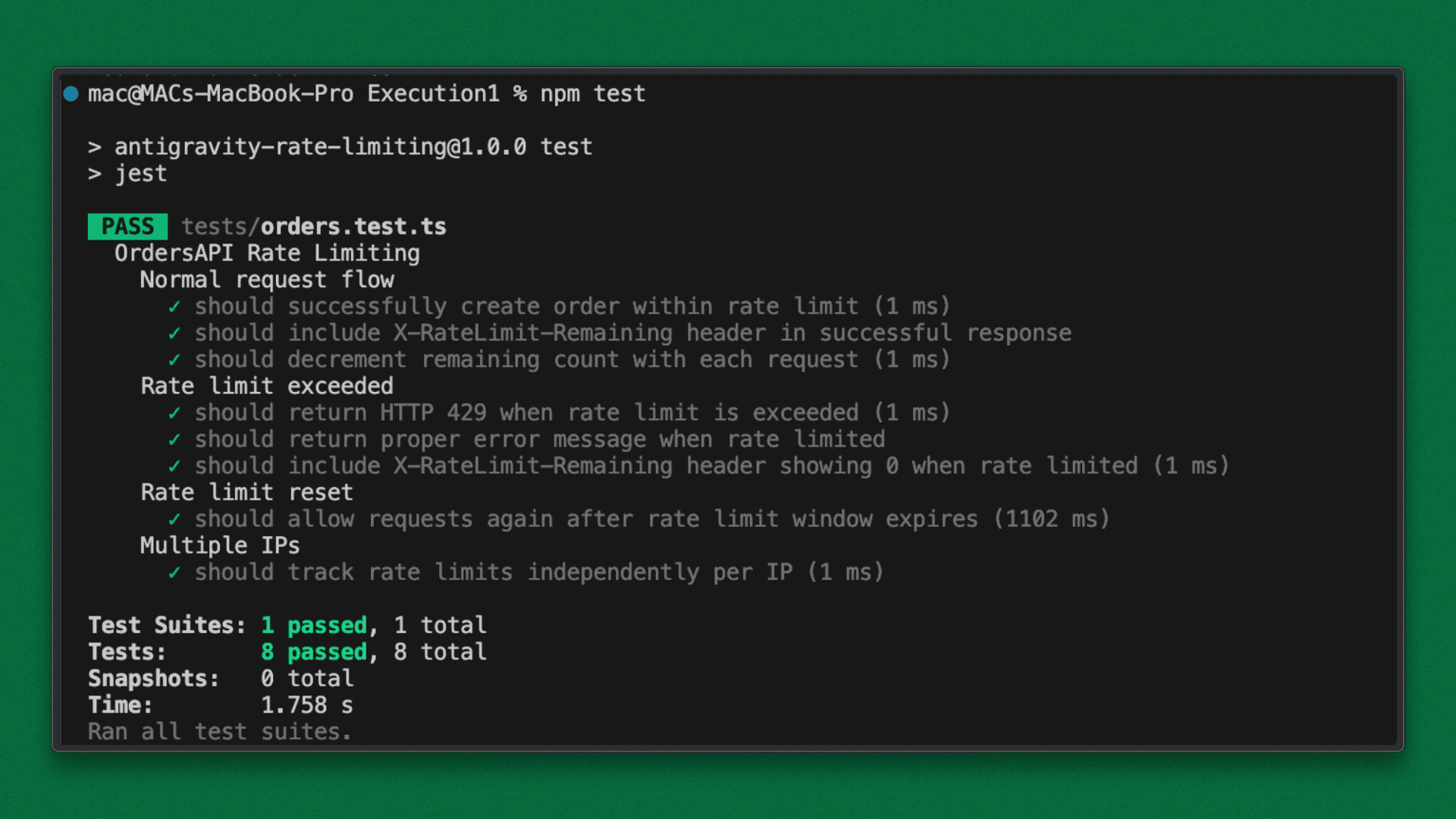

Execution

On execution, the agent produced a plan, applied the changes within the defined scope, and updated the tests.

A mismatch between intent and implementation was immediately visible through the artifacts, allowing the task to be re-run with a clarified rule and corrected on the second pass.

The final result was a complete, test-verified feature delivered with minimal iteration and no manual debugging. By collapsing planning, implementation, validation, and review into a single task-driven loop, Antigravity significantly reduces the time and coordination typically required to ship production changes, demonstrating how agent-based execution directly contributes to a 10× coding workflow.

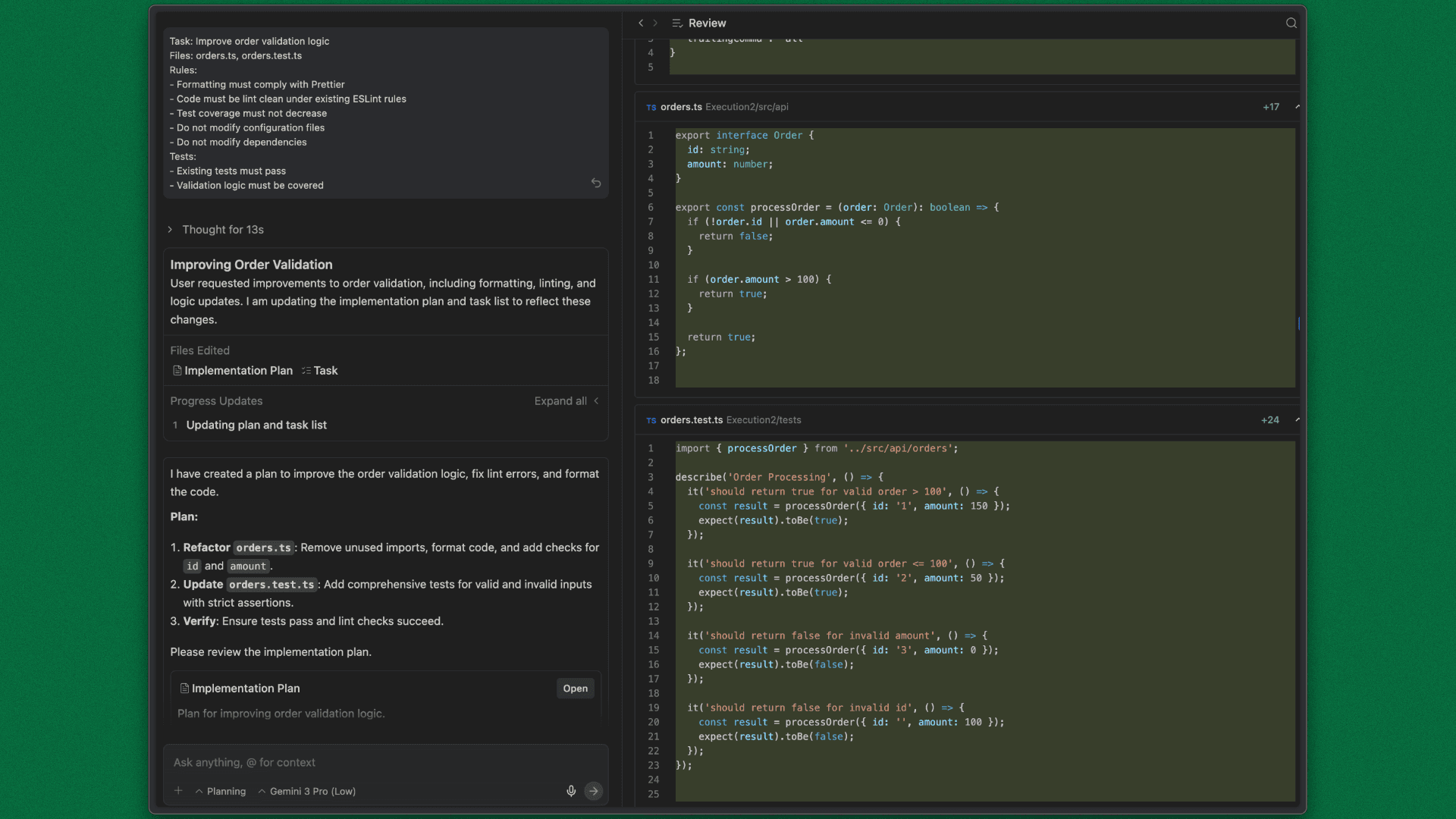

Making Formatting and Linting Mandatory with Agent Rules

Formatting, linting, and test coverage are often treated as post-execution concerns when working with AI-generated code. Without explicit constraints, generated changes may satisfy functional requirements while diverging from established project standards, increasing review effort.

Antigravity allows these standards to be enforced directly through agent rules, constraining how tasks are executed rather than relying on post-execution review.

Task Prompt

Execution

The execution for this example is available in the accompanying repository. When executed under explicit formatting, linting, and coverage rules, the agent adapted its behavior during execution. It reformatted the implementation, removed lint violations, and strengthened test assertions before completing the task, without modifying configuration or dependencies.

The rule-constrained execution produced a standards-compliant diff without manual intervention or post hoc cleanup. By enforcing formatting and linting as executable constraints, Antigravity reduces review overhead and ensures consistent adherence to project quality standards.
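The idea of treating standards as executable constraints can be sketched as a gate that a change must pass before a task counts as complete. Everything here is an assumption made for illustration: the `QualityRules` and `QualityReport` shapes, the field names, and any threshold values are hypothetical and are not Antigravity's rule format.

```typescript
// Hypothetical sketch: rule and report shapes are assumptions, not Antigravity's format.
interface QualityRules {
  maxFormattingIssues: number;
  maxLintViolations: number;
  minCoveragePercent: number;
  allowConfigChanges: boolean;
}

interface QualityReport {
  formattingIssues: number;
  lintViolations: number;
  coveragePercent: number;
  configFilesChanged: string[];
}

// Return one message per violated rule; an empty array means the change passes.
function findRuleViolations(report: QualityReport, rules: QualityRules): string[] {
  const violations: string[] = [];
  if (report.formattingIssues > rules.maxFormattingIssues) {
    violations.push(`formatting: ${report.formattingIssues} unformatted file(s)`);
  }
  if (report.lintViolations > rules.maxLintViolations) {
    violations.push(`lint: ${report.lintViolations} violation(s)`);
  }
  if (report.coveragePercent < rules.minCoveragePercent) {
    violations.push(
      `coverage: ${report.coveragePercent}% is below ${rules.minCoveragePercent}%`,
    );
  }
  if (!rules.allowConfigChanges && report.configFilesChanged.length > 0) {
    violations.push(`config: modified ${report.configFilesChanged.join(", ")}`);
  }
  return violations;
}
```

The point of the sketch is the direction of control: the rules are evaluated as part of execution, so a non-empty result sends the work back for another pass instead of leaving cleanup to the reviewer.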

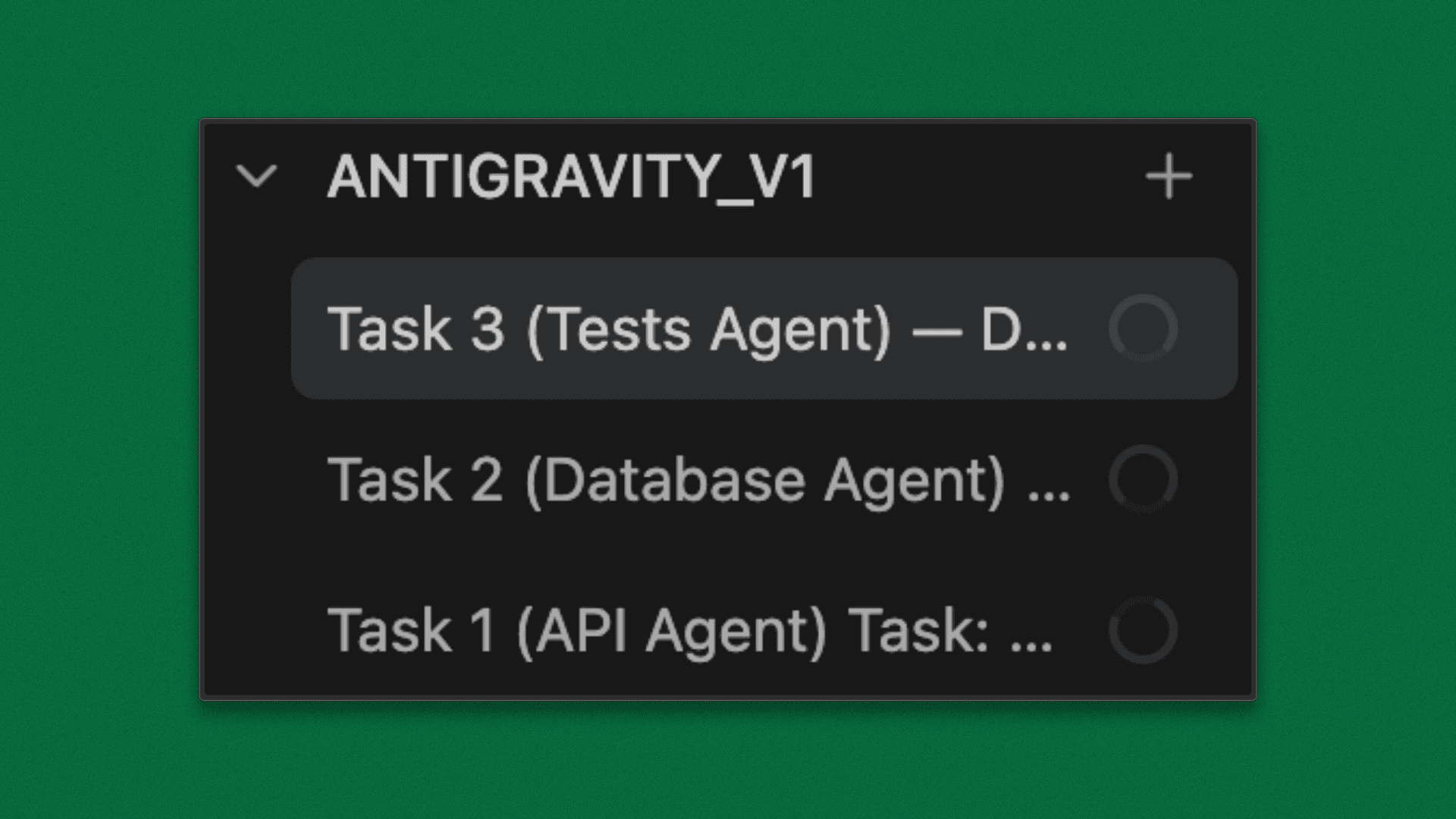

Splitting a Feature Across Multiple Agents

When a feature spans multiple layers of the system, sequential execution can introduce unnecessary delays. Antigravity allows such work to be decomposed into independent tasks and executed in parallel, provided file boundaries and responsibilities are clearly defined.

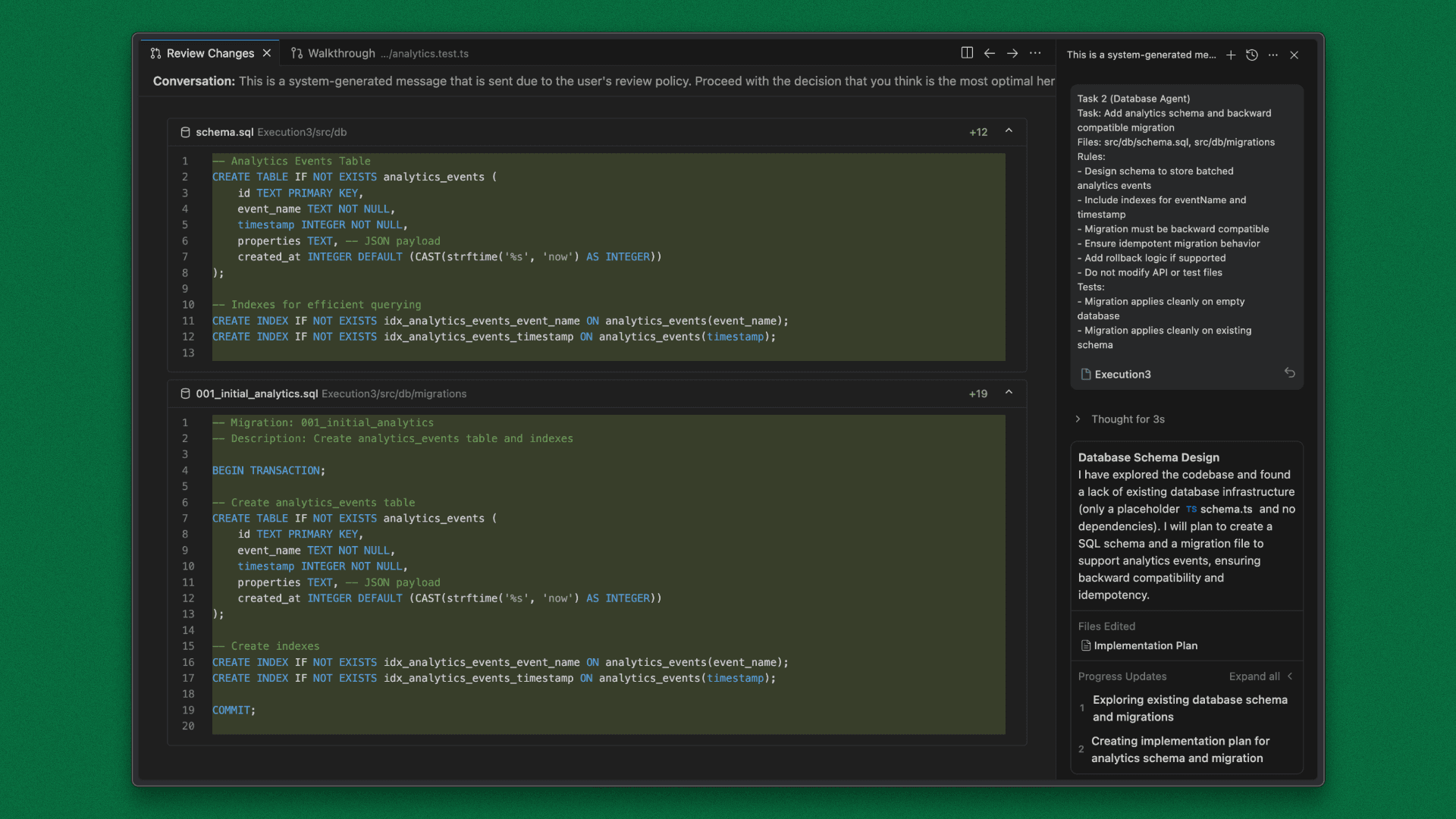

Feature

Add analytics support across the API, persistence layer, and test suite. The feature requires new endpoints for event ingestion, schema changes to store analytics data, and corresponding test coverage.

Task Prompts

The feature was split into three executable tasks, each scoped to a distinct responsibility and file set.

Task 1: API Endpoints

Task 2: Database Schema and Migration

Task 3: Tests

Execution

The accompanying repository contains the execution of all three agents.

All three tasks were executed concurrently. Two agents operated on completely separate files and progressed without interference. The only overlap occurred in the schema definition file, which both the schema change and the migration touched; it was resolved during the merge.

Because each agent was constrained to a well-defined scope, changes remained localized and predictable. Review focused on validating each diff independently rather than reasoning about interleaved changes across the system.
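Overlaps like the one described above can be detected before execution by comparing the declared file sets of each task. The following sketch assumes a hypothetical `ScopedTask` shape; the task names and file paths in the usage note are invented for illustration and are not taken from the repository.

```typescript
// Hypothetical sketch: task shape and file paths are assumptions for illustration.
interface ScopedTask {
  name: string;
  files: string[];
}

interface ScopeOverlap {
  file: string;
  tasks: string[];
}

// List every file that is claimed by more than one task.
function findScopeOverlaps(tasks: ScopedTask[]): ScopeOverlap[] {
  const owners = new Map<string, string[]>();
  for (const task of tasks) {
    for (const file of task.files) {
      const existing = owners.get(file);
      if (existing === undefined) {
        owners.set(file, [task.name]);
      } else {
        existing.push(task.name);
      }
    }
  }
  const overlaps: ScopeOverlap[] = [];
  owners.forEach((names, file) => {
    if (names.length > 1) {
      overlaps.push({ file, tasks: names });
    }
  });
  return overlaps;
}
```

Given three tasks where two of them list `db/schema.ts`, the function returns a single entry naming that file and both tasks. An empty result indicates that the tasks can run in parallel without touching the same files.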

Outcome

After merging the outputs, the combined diff represented the complete analytics feature. Parallel execution reduced overall delivery time while preserving control over scope and correctness, demonstrating how multi-agent workflows can accelerate feature development when responsibilities are clearly partitioned.

Reviewing Changes Through Artifacts Instead of Logs

Each Antigravity task produces a fixed set of structured artifacts that separate intent, implementation, and execution mechanics:

Plan describing the intended sequence of changes, constraints, and design decisions

Diff showing the exact code modifications applied to the repository

Logs capturing tool execution, command output, and runtime details

This separation enables a predictable and efficient review process. Instead of starting from logs or attempting to reconstruct execution steps, reviewers begin by validating intent and outcome directly through the plan and the resulting diff.

The review workflow typically follows a small number of explicit steps:

Read the plan to understand the scope of the task, constraints, and the changes the agent intends to make.

Inspect the diff to verify that the implementation matches the stated intent and respects declared rules and file boundaries.

Consult logs only when the plan and diff diverge or when execution details are required for diagnosis.
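The second step of this workflow, checking that a diff respects declared file boundaries, is mechanical enough to sketch in a few lines. The `ReviewInput` shape and function names below are hypothetical; they illustrate the check itself, not an Antigravity feature.

```typescript
// Hypothetical sketch: the input shape is an assumption for illustration.
interface ReviewInput {
  allowedFiles: string[]; // file scope declared in the task or plan
  changedFiles: string[]; // files that actually appear in the diff
}

// Return the files the diff touched that were not part of the declared scope.
function findOutOfScopeFiles(input: ReviewInput): string[] {
  const allowed = new Set(input.allowedFiles);
  return input.changedFiles.filter((file) => !allowed.has(file));
}

// Logs are only worth consulting when the diff diverges from the declared scope.
function shouldConsultLogs(input: ReviewInput): boolean {
  return findOutOfScopeFiles(input).length > 0;
}
```

A file-level check of this kind only covers scope. Whether the change inside an allowed file matches the stated intent still requires reading the plan and the diff side by side.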

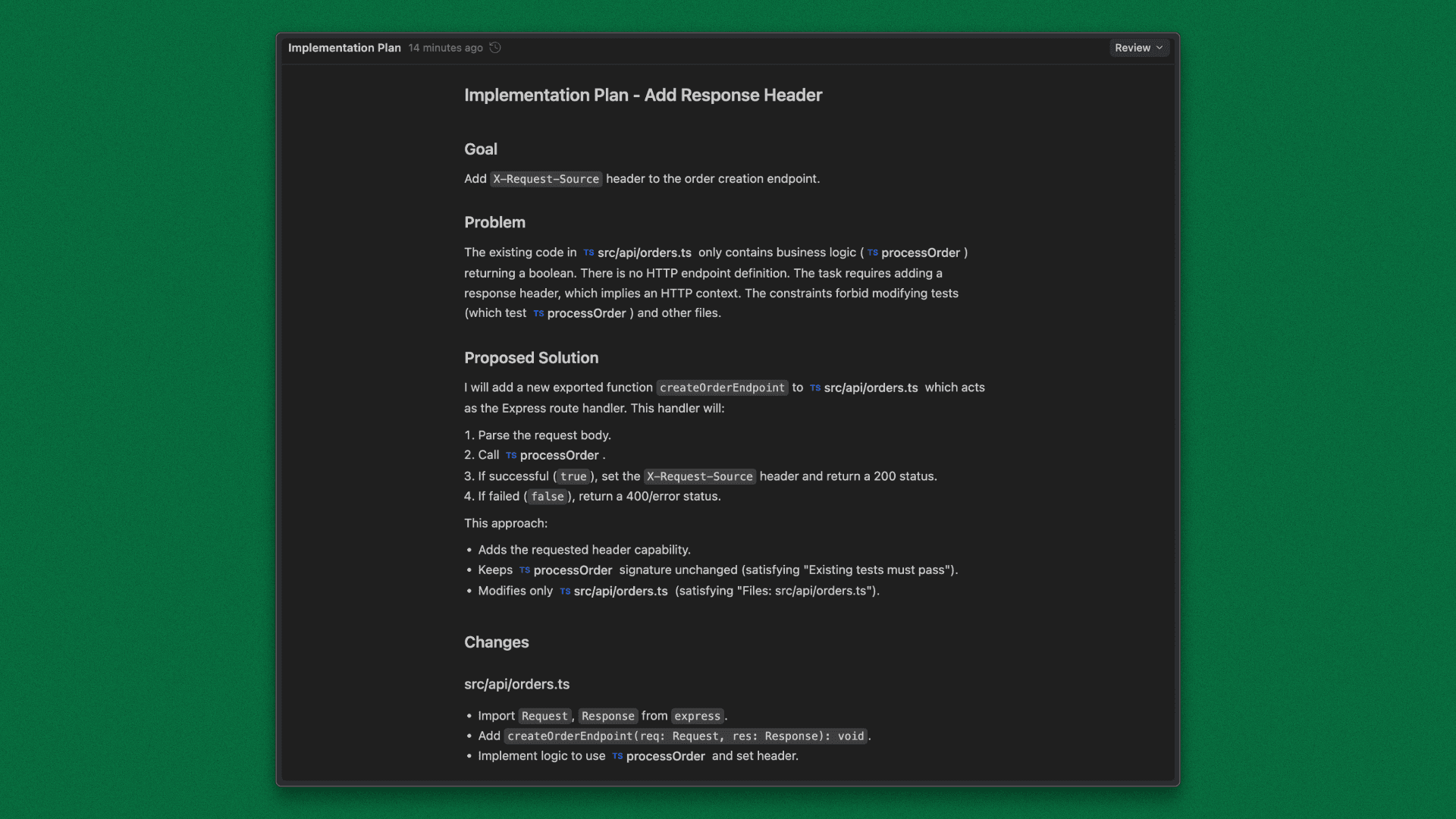

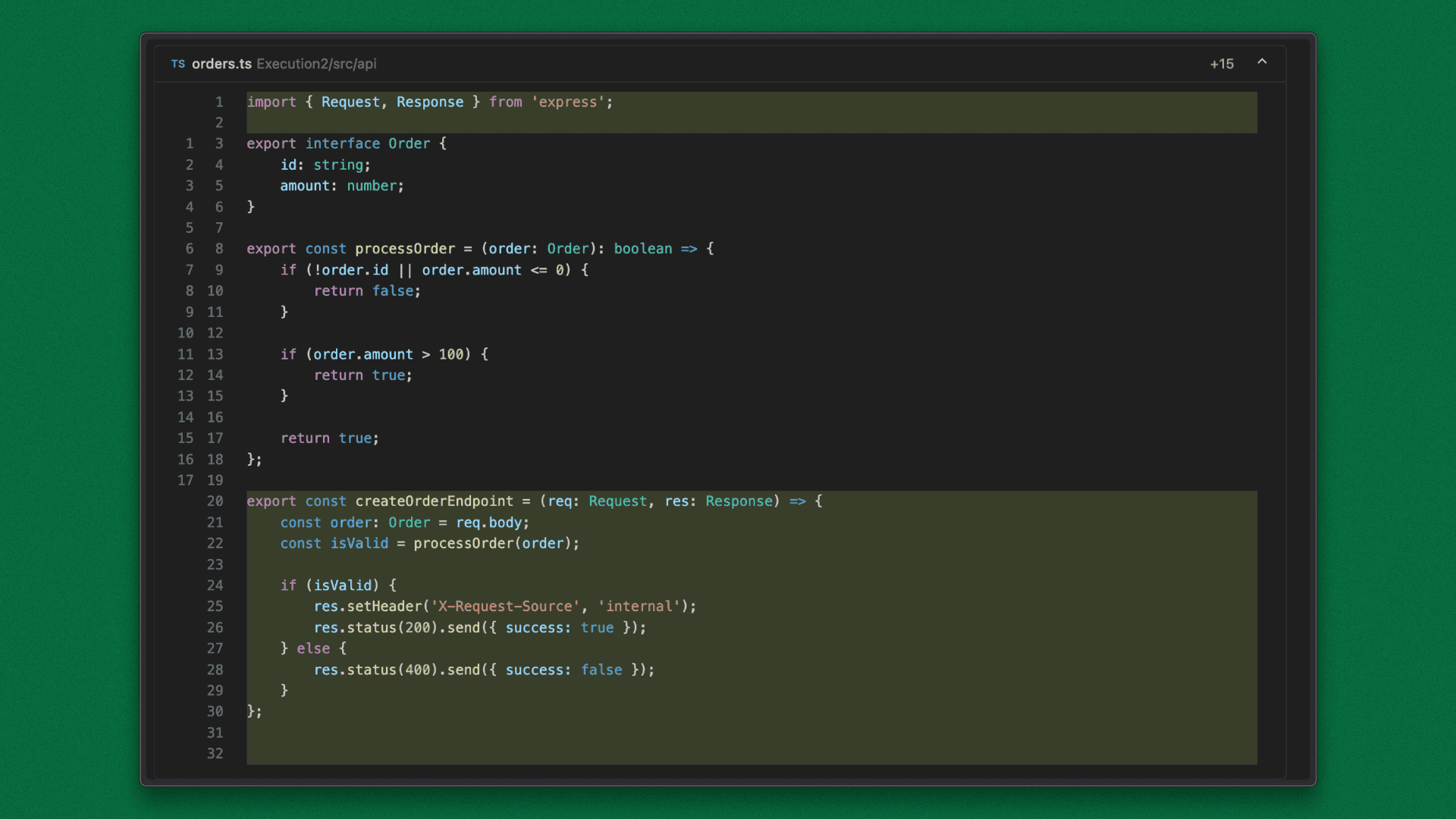

Example: Plan–Diff Mismatch Visible at Review Time

In one execution, a task required adding an X-Request-Source response header to an order creation endpoint under specific conditions, while restricting changes to a single file and disallowing modifications to authentication logic or tests.

The generated plan described how the header would be added in accordance with these constraints.

However, inspection of the resulting diff revealed that the header was applied unconditionally, diverging from the conditional behavior described in the plan.

This discrepancy was immediately visible by comparing the plan and the diff alone. No logs were inspected, no tests were rerun, and no execution steps needed to be reconstructed. The issue surfaced during review purely through artifact comparison.
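The kind of mismatch described here is easy to recognize in a diff. The sketch below contrasts the two behaviors. The article does not state the actual condition, so the one used here (the request carries a `source` value) is a hypothetical stand-in, as are the function names and the `"unknown"` fallback.

```typescript
// Hypothetical sketch: the real condition is not given in the article.
// "request.source is present" is an assumed stand-in for it.
interface OrderRequest {
  source?: string;
}

// Behavior described in the plan: set the header only when the condition holds.
function plannedHeaders(request: OrderRequest): Record<string, string> {
  const headers: Record<string, string> = {};
  if (request.source !== undefined) {
    headers["X-Request-Source"] = request.source;
  }
  return headers;
}

// Behavior found in the diff: the header is set on every response.
function observedHeaders(request: OrderRequest): Record<string, string> {
  return { "X-Request-Source": request.source ?? "unknown" };
}
```

For a request without a `source` value, `plannedHeaders` returns no header while `observedHeaders` still returns one. A reviewer comparing the plan's wording with the missing `if` in the diff can spot this without running anything.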

For reviewers, this model shifts effort away from debugging execution and toward validating correctness, scope, and design intent. Artifact-based review reduces cognitive load, shortens feedback cycles, and makes each task auditable by explicitly linking what was planned, what was changed, and how the change was executed.

Controlling Context and Token Usage Through Context Engineering

As codebases grow, the dominant failure mode for agent-driven development is not incorrect logic, but uncontrolled context. When agents are provided with broad repository access or large unfiltered memory inputs, they attempt to reconcile multiple patterns, abstractions, and historical decisions simultaneously. The result is predictable: unnecessary imports, pattern drift, oversized diffs, and inflated token usage.

This behavior is not specific to Antigravity. It reflects a fundamental property of large language models: attention is distributed across all provided input. As irrelevant context increases, the signal associated with the task decreases.

Why Context Engineering Must Be External to the Task

Task-level scoping alone is insufficient at scale. Without an external context system, each task must repeatedly encode architectural decisions, conventions, exclusions, and historical constraints. This leads to prompt inflation, duplicated tokens, and inconsistent enforcement across tasks.

Context engineering becomes effective only when shared knowledge is persistent, structured, and selectively retrievable.

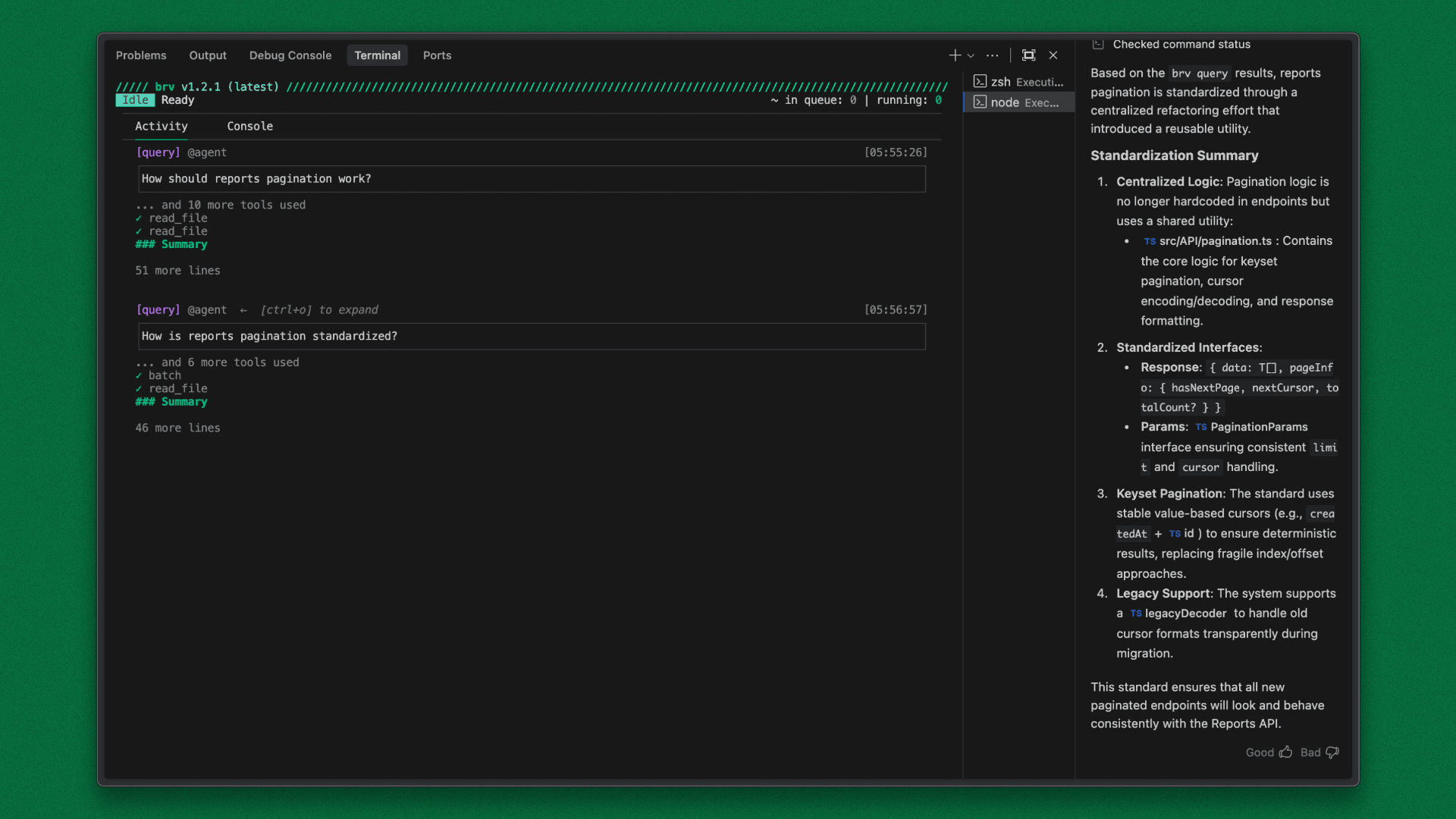

ByteRover: Persistent Context with Selective Retrieval

In systems such as ByteRover, project knowledge is stored as a Context Tree. Architectural conventions, historical decisions, service boundaries, and code ownership rules are captured once and reused across tasks.

Instead of injecting all context into every execution, agents retrieve only the branches relevant to the task they are performing. This shifts context from being redeclared to being referenced.
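Selective retrieval from a tree of project knowledge can be sketched as follows. This is not ByteRover's API or data model: the `ContextNode` shape, the `retrieveNotes` function, and the topic names are assumptions used only to illustrate retrieving relevant branches instead of the whole tree.

```typescript
// Hypothetical sketch: not ByteRover's API; shapes and names are assumptions.
interface ContextNode {
  topic: string;
  notes: string[];
  children: ContextNode[];
}

// Collect notes from every branch whose topic was requested, including that
// branch's descendants, and skip everything else.
function retrieveNotes(root: ContextNode, topics: string[]): string[] {
  const wanted = new Set(topics);
  const collected: string[] = [];
  const visit = (node: ContextNode, inherited: boolean): void => {
    const selected = inherited || wanted.has(node.topic);
    if (selected) {
      collected.push(...node.notes);
    }
    for (const child of node.children) {
      visit(child, selected);
    }
  };
  visit(root, false);
  return collected;
}

const projectContext: ContextNode = {
  topic: "project",
  notes: [],
  children: [
    {
      topic: "pagination",
      notes: ["Use cursor-based pagination for new endpoints"],
      children: [
        { topic: "deprecated", notes: ["Offset-based pagination is deprecated"], children: [] },
      ],
    },
    { topic: "service-boundaries", notes: ["Each service owns its own tables"], children: [] },
  ],
};
```

Calling `retrieveNotes(projectContext, ["pagination"])` returns the two pagination notes and leaves the service boundary note out, so the task receives only the context it needs.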

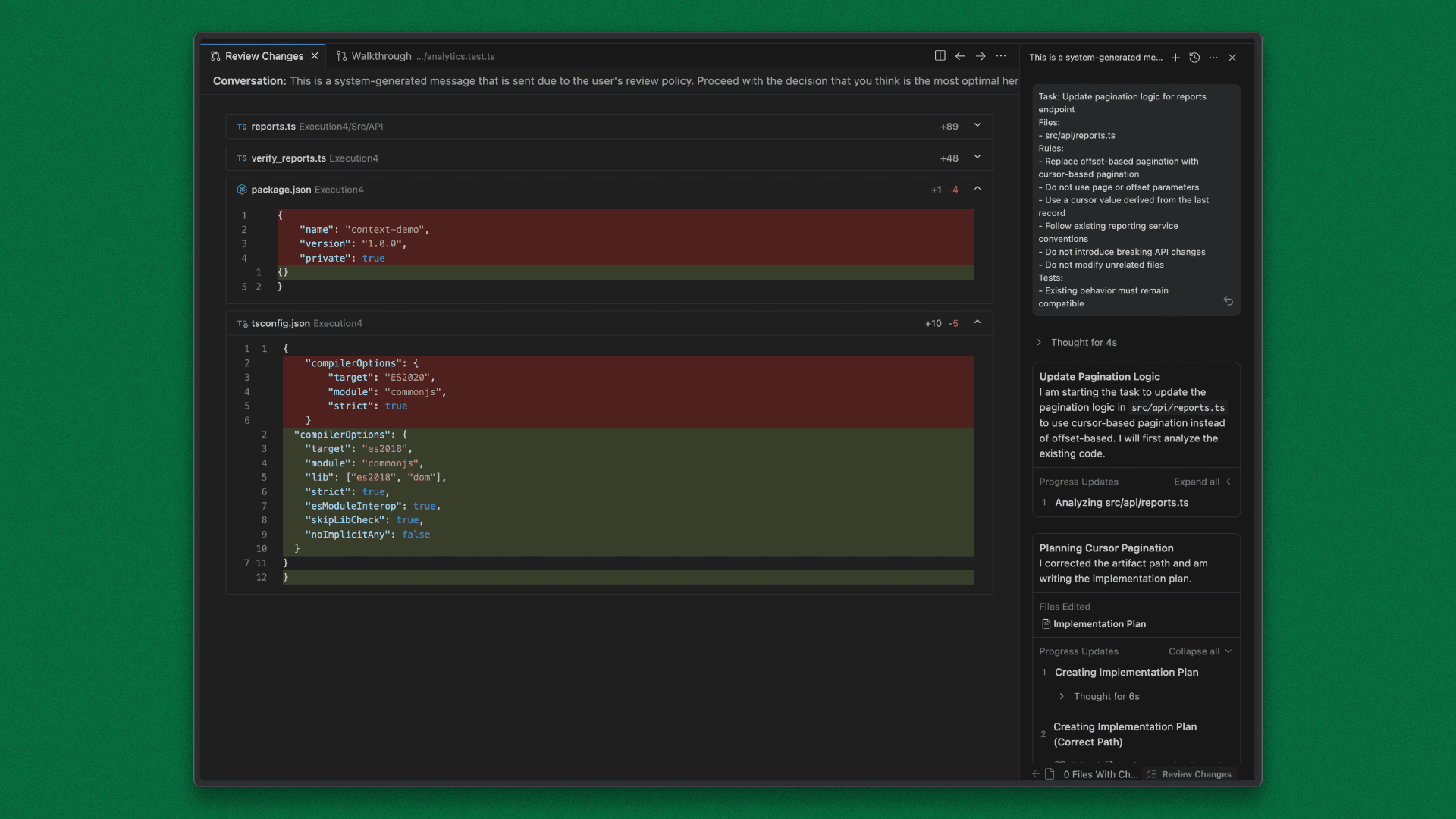

Concrete Example: Pagination Logic Update

Consider a repository with multiple pagination implementations, including deprecated offset-based logic and current cursor-based standards.
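For readers less familiar with the distinction, cursor-based pagination resumes from the last item seen instead of skipping a fixed number of rows. The sketch below is a minimal in-memory illustration. The `Row` and `Page` types and the `pageAfterCursor` function are hypothetical and are not taken from the repository used in this example.

```typescript
// Hypothetical sketch: types and function name are assumptions for illustration.
interface Row {
  id: number;
}

interface Page<T> {
  items: T[];
  nextCursor: number | null; // id of the last returned item, or null on the last page
}

// Return up to `limit` rows whose id is greater than `cursor`.
// Assumes `rows` is sorted by ascending id.
function pageAfterCursor<T extends Row>(
  rows: T[],
  cursor: number | null,
  limit: number,
): Page<T> {
  const start = cursor === null ? Number.NEGATIVE_INFINITY : cursor;
  const remaining = rows.filter((row) => row.id > start);
  const items = remaining.slice(0, limit);
  const last = items[items.length - 1];
  const hasMore = remaining.length > items.length;
  return { items, nextCursor: hasMore && last !== undefined ? last.id : null };
}
```

Because each page is anchored to an id instead of a position, rows inserted or deleted between requests do not cause items to be skipped or repeated, which is a common reason for preferring cursors over offsets.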

Without ByteRover

In the first execution, pagination rules and exclusions were encoded directly into the task prompt. The task explicitly specified which pagination strategy to use, which patterns to avoid, and which files should remain untouched.

The resulting execution required a long task definition and produced a broad set of changes. In addition to updating the target file, the agent introduced verification scripts and modified configuration files as a defensive measure. These changes were functionally correct, but expanded the scope of the diff beyond what the task strictly required.

This behavior illustrates how, in the absence of an externalized context, the agent compensates by adding structure and safeguards to ensure correctness.

With ByteRover

In the second execution, pagination standards and service boundaries already existed as a persistent project context. The task definition was reduced to a concise statement of intent and file scope, without restating pagination rules or exclusions.

Despite the smaller prompt, the agent aligned with the existing pagination standard, avoided deprecated patterns, and confined changes to the intended file. The resulting diff was smaller and more localized, with no additional verification or configuration artifacts introduced.

The contrast between the two executions highlights the effect of context externalization. The difference is not improved prompting discipline, but the presence of reusable project knowledge retrieved automatically at execution time.

Measurable Impact

This approach produces observable, repeatable effects:

Task prompts remain small as the codebase grows

Token usage decreases by avoiding repeated context injection

Agent behavior aligns with historical decisions without restatement

Diffs remain localized and easier to review

These effects follow from context externalization and reuse, which is the core of context engineering.

By separating persistent project knowledge from per-task intent, ByteRover enables Antigravity agents to operate with higher signal, lower noise, and controlled token consumption across sustained execution workflows.

Closing

Antigravity changes where AI operates in the development process. Instead of assisting with isolated coding actions, it executes clearly defined units of work under explicit scope, rules, and verification. Plans, diffs, and logs are produced as first-class artifacts, allowing intent and outcome to be reviewed directly without reconstructing execution after the fact.

Across the examples in this article, the same pattern emerges. Constraining scope reduces noise. Encoding standards as rules removes review friction. Parallel agents shorten delivery when boundaries are clear. Deliberate context control, supported by ByteRover, limits unnecessary reasoning and token usage. Together, these mechanisms make agent-driven development predictable and reviewable, which ultimately determines whether it can be used reliably in production environments.